
The fraud landscape in 2025 has reached an alarming level of sophistication. Criminals are leveraging AI-powered deepfakes, romance-driven crypto schemes, and fake travel offers to deceive people and businesses worldwide. Below, we explore the five most significant scams this year—each supported by real data, expert sources, and clear prevention strategies.

1. AI-Powered Deepfakes: When Business Meetings Become Illusions

One of the most shocking recent scams occurred in Hong Kong, where an employee at a multinational firm was tricked into transferring HK$200 million (roughly US$25 million) during what they thought was a routine video call with their CFO and team. In reality, every other participant was a synthetic clone created with deepfake video and audio. This case, covered by CNBC, highlighted the terrifying new era of fraud.

The firm was later identified as engineering company Arup, whose finance worker made the transfers after speaking to what they believed was their CFO, as reported by Eftsure. The Australian Government Counter Fraud team also confirmed a surge in deepfake scams, warning that synthetic media can now bypass traditional security checks. These high-level impersonations show how AI has weaponized social engineering.

2. Pig-Butchering Scams: Crypto Fraud Meets Romance

So-called “pig butchering” scams have exploded across Asia, Europe, and North America. Scammers build fake romantic relationships with victims, often through dating apps or WhatsApp, then gradually introduce them to fraudulent crypto investment platforms. Once the victim deposits money, the scammer disappears—leaving behind emotional and financial devastation.

Chainalysis reported that romance scams are among the most damaging forms of crypto crime, with this category growing 85x since 2020. A single fraud operation linked to these scams funneled $10.5 million in stolen funds using AI-generated social media profiles. This type of scam is covered in more depth on our Scam Knowledge Hub.

3. Bank Impersonation Scams: Deepfake Audio Attacks Financial Systems

Banks have become prime targets for deepfake-enabled fraud. In 2025, scammers began using AI-generated voices and forged documents to impersonate bank officials and authorize account access. According to HK Lawyer and Deacons, criminals now use stolen ID cards and voice-cloning software to set up fake bank accounts or conduct phishing scams by phone.

The method works because many authentication processes still rely on basic biometric cues like voice recognition. Victims are often contacted via fake customer service calls, believing they’re speaking with real representatives. These scams are difficult to trace and often go undetected until major financial damage occurs.

4. Crypto Investment Scams: Fake Platforms, Real Losses

The crypto space in 2025 is more dangerous than ever. Fraudsters are creating fake exchanges and trading platforms that look fully legitimate. Victims see what appears to be actual profit charts and support agents, but it’s all AI-generated theater. Once deposits are made, withdrawals are blocked, and the platform vanishes.

According to Dubai-based Gulf News, crypto-related scams are on track to surpass $12 billion in losses globally. These operations use synthetic identities, stolen branding, and spoofed websites to lure investors. A number of brokers flagged on FraudReviews.net have followed this model: glossy UIs, fake reviews, and aggressive marketing with no regulatory oversight.

5. Travel and Online Purchase Scams: Deals Too Good to Be True

Online travel fraud and fake product listings have exploded in 2025, thanks to the use of AI-generated visuals, listings, and fake reviews. Scammers create cloned websites for luxury hotels, rental homes, or gadgets—complete with phony customer testimonials and booking systems. Victims only realize they’ve been scammed when the accommodation doesn’t exist or the product never arrives.

Consumer protection groups across the EU and U.S. have warned about a spike in AI-enhanced travel scams, where even real images from legitimate providers are scraped and reused in fraudulent listings. These scams exploit the high demand for last-minute travel deals and discounted luxury items, using urgency to push quick decisions.

Why Are These the Biggest Scams of 2025?

All of these fraud types share one root: advanced AI tools. Deepfake audio, video, and synthetic content are now used to automate, scale, and personalize scams. Instead of casting a wide net, scammers in 2025 are using precision tools to emotionally manipulate victims or to impersonate the people those victims trust. Reports from Independent Banker show that 50% of businesses have already experienced deepfake fraud, with average losses nearing $450,000.

Traditional cybersecurity models simply can’t keep up. Criminals are evolving faster than regulatory frameworks can respond.

Young Generations Are the Most Vulnerable to Scam Losses in 2025

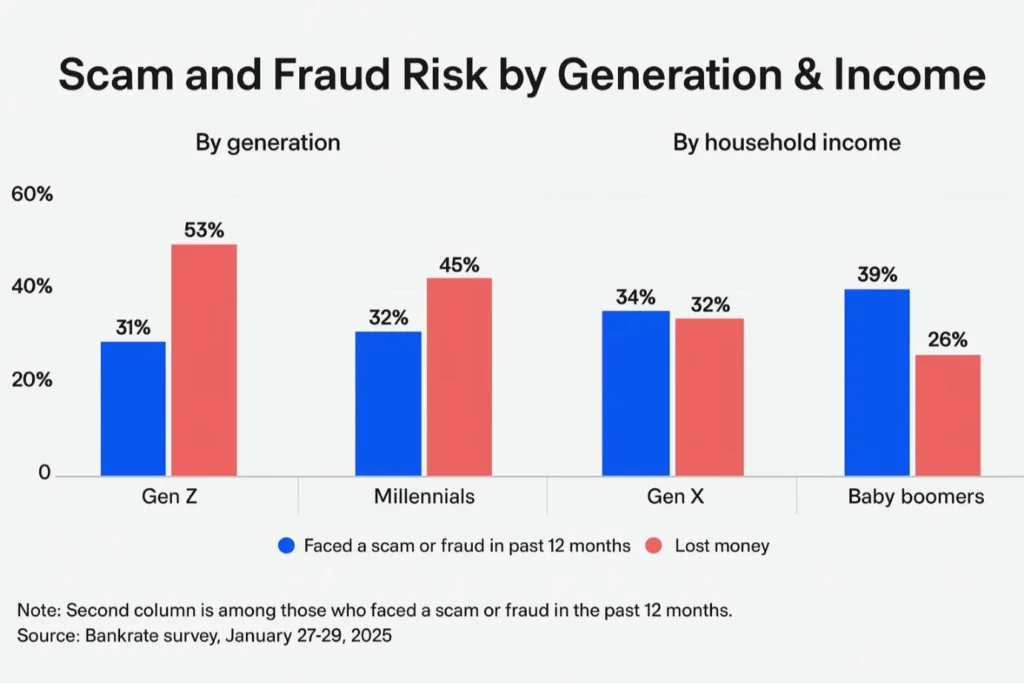

Recent survey data from January 2025 reveals a surprising shift in scam vulnerability across generations. Contrary to the common belief that older adults are the primary victims of online fraud, Gen Z and Millennials now report the highest scam loss rates: among those who encountered scams, 53% of Gen Z and 45% of Millennials said they lost money.

These figures suggest that younger users—who spend more time online, use digital wallets, and engage with social media—are more exposed to sophisticated fraud tactics like romance scams, phishing messages, and fake investment platforms.

Meanwhile, Baby Boomers encountered scams at a slightly higher rate than younger generations (39%) yet reported the lowest loss rate, at just 26%. This contrast suggests that while older generations may recognize scams more easily, younger people are more likely to fall for highly personalized or AI-enhanced frauds.

The trend underscores the importance of targeted scam education not just for seniors, but for digital-native generations who may underestimate modern scam complexity.

How to Stay Safe in an Age of AI Scams

To protect yourself, verify any financial request, especially one involving a large transfer or a new platform, through a second channel. Call back on a phone number you already know, reach the person directly through an app you already use, and don’t trust what you see on screen without validation. For businesses, implement strict payment authorization protocols and train employees to recognize AI-fueled impersonation tactics.
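
For teams that want to turn this advice into a rule, a payment authorization check might look something like the sketch below. It is only an illustration: the `PaymentRequest` fields, the `missing_checks` helper, and the 10,000 threshold are hypothetical choices made for this example, not an industry standard. The idea is that no transfer is released until someone has confirmed it through a second channel and enough people other than the requester have approved it.

```python
from dataclasses import dataclass, field

# Hypothetical threshold: transfers at or above this amount need two approvers.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str                    # who asked for the transfer (as claimed on the call or email)
    amount: float
    payee_is_new: bool                # True if this payee has never been paid before
    callback_verified: bool = False   # set only after calling a number already on file
    approvers: set[str] = field(default_factory=set)


def missing_checks(req: PaymentRequest) -> list[str]:
    """Return the checks still blocking this payment (an empty list means it may be released)."""
    problems = []
    if not req.callback_verified:
        problems.append("confirm the request via a known phone number (second channel)")
    # The requester's own approval does not count.
    independent = req.approvers - {req.requester}
    needed = 2 if (req.amount >= DUAL_APPROVAL_THRESHOLD or req.payee_is_new) else 1
    if len(independent) < needed:
        problems.append(f"need {needed} independent approver(s), have {len(independent)}")
    return problems


if __name__ == "__main__":
    # A large transfer to a new payee, "approved" only by the person who asked for it.
    request = PaymentRequest(requester="cfo", amount=250_000, payee_is_new=True)
    request.approvers.add("cfo")
    for problem in missing_checks(request):
        print("BLOCKED:", problem)
```

In a deepfake video call like the Hong Kong case, a rule of this kind would hold the payment until someone phoned the real CFO on a number already on file, no matter how convincing the call looked.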